TurboTLS: TLS connection establishment with 1 less round trip

“A fast padlock rocket from a distance in the style of cyber punk” by DALL-E2.

Expert readers already familiar with transport and security protocols may wish to skip to the Summary for Expert Readers at the bottom.


What proportion of network data is encrypted? Today it stands at a little over 95%, by the best estimates. A decade ago, Google estimated it was less than 50%. If that improvement sounds impressive, just consider that over those 10 years, the volume of internet traffic has increased from ~0.5 exabytes to ~300 exabytes per month.

When we hear the word ‘encryption’, we often leap to thoughts of secure messaging apps like WhatsApp or Signal, as they regularly feature in headline news about the latest ‘back door’ legislation. But almost all of your internet usage is encrypted, authenticated, or otherwise protected by cryptographic algorithms, which ensure that a secure connection is established whenever you load a webpage. The protocols that establish those connections contribute to the total latency you experience as a user, that is, the time you wait before the page loads. In this post we describe a new innovation from SandboxAQ that can cut the latency of establishing a secure connection by up to half: TurboTLS.

Transport

The internet runs on a stack of protocols, from the lowest-level parsers of 0/1 electrical signals up to those that move application data around your computer. We’ll start with the transport layer, whose protocols are responsible for sending and receiving packets of information between machines. These protocols are so fundamental to networking that they are generally handled inside your computer’s kernel rather than within customisable software or applications.

The route a packet takes is often circuitous and beset by challenges. Packets pass through many physical devices, undergo firewall inspection, and traverse noisy channels before, ideally, arriving at a server to deliver their payload. A packet that never arrives is said to be ‘dropped’. Dropped packets are identified by tracking sequence numbers: if the packets numbered just before and just after a given number arrive but that number does not, the packet in between was presumably dropped.
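As a minimal sketch of this idea, assume each packet carries one consecutive integer sequence number (real TCP numbers bytes rather than packets, so this is a simplification). A receiver can then infer drops from gaps in the numbers it has seen. The helper name `find_dropped` is hypothetical.

```python
def find_dropped(received: list[int]) -> list[int]:
    """Hypothetical helper: sequence numbers missing from what has arrived.

    Simplification: one consecutive integer per packet (real TCP numbers
    bytes, not packets). Packets may arrive out of order or be duplicated.
    """
    if not received:
        return []
    seen = set(received)
    lowest, highest = min(seen), max(seen)
    # A number between the lowest and highest seen that never arrived is
    # presumed dropped (a real protocol would allow time for late arrivals).
    return [n for n in range(lowest, highest + 1) if n not in seen]


# Packet 5 arrived out of order; packets 3 and 6 never arrived.
print(find_dropped([1, 2, 5, 4, 7]))  # → [3, 6]
```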

Transport Protocols

TCP

To overcome these challenges we can use the Transmission Control Protocol (TCP). To establish a connection, the client and server first perform a three-way handshake (SYN, SYN-ACK, ACK). From then on, the receiver periodically acknowledges the packets it has received, the sender retransmits any that appear to have been dropped, and packets that arrive out of sequence are reordered. An application communicating over TCP therefore no longer has to worry about the messy details of sending packets over the internet. This convenience has made TCP the transport for the majority of web traffic! But waiting around for regular acknowledgements has a cost, and sometimes speed matters more than reliability. So we turn to an alternative: UDP.
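The sequence-number bookkeeping of the three-way handshake can be sketched as a toy exchange of segments. This is a simplified illustration, not a real TCP stack: the `Segment` type and `three_way_handshake` function are hypothetical, and each side’s initial sequence number (ISN) is simply drawn at random as a 32-bit value. Because a SYN consumes one sequence number, each side acknowledges the other’s SYN with that ISN plus one.

```python
import random
from dataclasses import dataclass
from typing import Optional

SEQ_MODULUS = 2**32  # TCP sequence numbers are 32-bit and wrap around


@dataclass
class Segment:
    """Hypothetical toy model of a TCP segment header (not a real stack)."""
    flags: str                 # "SYN", "SYN-ACK" or "ACK"
    seq: int                   # sender's sequence number
    ack: Optional[int] = None  # next sequence number the sender expects


def three_way_handshake() -> list[Segment]:
    """Hypothetical helper returning the three handshake segments in order."""
    client_isn = random.getrandbits(32)  # client's initial sequence number
    server_isn = random.getrandbits(32)  # server's initial sequence number

    syn = Segment("SYN", seq=client_isn)
    # A SYN consumes one sequence number, so the server acknowledges ISN + 1.
    syn_ack = Segment("SYN-ACK", seq=server_isn,
                      ack=(client_isn + 1) % SEQ_MODULUS)
    # The client acknowledges the server's SYN in the same way.
    ack = Segment("ACK", seq=(client_isn + 1) % SEQ_MODULUS,
                  ack=(server_isn + 1) % SEQ_MODULUS)
    return [syn, syn_ack, ack]


for segment in three_way_handshake():
    print(segment)
```

The client can only start sending data, such as a TLS Client Hello, once it has received the SYN-ACK, one full round trip after its SYN.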

UDP

For real-time applications such as phone calls, it may be acceptable for the line to go a bit crackly or drop out for a few seconds; in any case, processing packets that arrive more than a fraction of a second late would not help. The conversation has moved on, and you probably understood what was said from the packets that made it through. For applications like these, waiting for ACKs is impractical. Instead, the User Datagram Protocol (UDP) sends packets with no way of knowing whether they were received. Protocols that want the benefits of UDP must either live with its lack of reliability or implement their own mechanisms for packet reordering, connection establishment, and so on.
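To make the contrast concrete, here is a minimal sketch using Python’s standard `socket` module on the loopback interface. The sender calls `sendto` with no handshake and gets no acknowledgement back. Over loopback the datagrams will normally arrive, but over a real network nothing in UDP itself would tell the sender if one were lost. The “voice frame” payloads are illustrative.

```python
import socket

# Receiver: bind a UDP socket to an ephemeral port on localhost.
receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
receiver.bind(("127.0.0.1", 0))
receiver.settimeout(1.0)  # don't block forever if a datagram is lost
addr = receiver.getsockname()

# Sender: no connection setup at all; each sendto() is fire-and-forget.
sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
for i in range(3):
    sender.sendto(f"voice frame {i}".encode(), addr)

received = []
for _ in range(3):
    try:
        data, _source = receiver.recvfrom(2048)
        received.append(data.decode())
    except socket.timeout:
        # A missing frame is simply skipped, as a real-time app would do.
        break

print(received)
sender.close()
receiver.close()
```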

Security

Having established how you want to send and receive packets, the next step is to add security. The most pervasive protocol is Transport Layer Security (TLS), which is indicated by the padlock in your address bar and runs over TCP. Once the TCP connection is set up, the client and server begin a handshake, during which cryptography is used to verify the server’s (and sometimes the client’s) identity and to exchange keys for encrypting the rest of the session. With TLS 1.3, this process usually costs two round trips in total: one for the TCP handshake and one for the TLS handshake.

Another approach is QUIC, a protocol initially developed by Google, which runs over UDP, has TLS built directly into it, and handles connection management internally, including packet ordering, acknowledgements, and dropped packets. As of 2018, QUIC accounted for around 8% of internet traffic, of which 98% was Google traffic. Because it runs over UDP and doesn’t need to wait a full round trip before moving on to the security setup, QUIC establishes connections at least one round trip faster than TLS over TCP. This advantage is minor for local connections, but is amplified for long-distance connections and for applications that establish many connections. Even loading a simple web page can involve as many as 10 TLS connections.
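The round-trip counting can be made concrete with a back-of-the-envelope model. It assumes TLS 1.3 (two round trips in total over TCP, as above) and one round trip for QUIC, and it ignores computation, packet loss, and session resumption. The helper name is hypothetical and the 150 ms round-trip time is an illustrative input, not a measurement. The saving grows with both the round-trip time and the number of connections set up one after another.

```python
def setup_latency_ms(rtt_ms: float, round_trips: int,
                     sequential_connections: int = 1) -> float:
    """Hypothetical helper: network-only time spent establishing connections."""
    return rtt_ms * round_trips * sequential_connections


RTT_MS = 150.0    # illustrative long-distance round-trip time, not measured
TLS_OVER_TCP = 2  # one RTT for the TCP handshake + one for the TLS 1.3 handshake
QUIC = 1          # transport and cryptographic handshakes combined

for n in (1, 10):
    tls = setup_latency_ms(RTT_MS, TLS_OVER_TCP, n)
    quic = setup_latency_ms(RTT_MS, QUIC, n)
    print(f"{n:>2} sequential connection(s): TLS over TCP {tls:.0f} ms, "
          f"QUIC {quic:.0f} ms, saved {tls - quic:.0f} ms")
```

With these inputs, ten connections made one after another spend 3000 ms on setup with TLS over TCP versus 1500 ms with QUIC.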

So why doesn’t everyone use QUIC? Despite its obvious benefits, many providers, such as Palo Alto Networks and Cisco, block QUIC because they cannot inspect its packets (and thus cannot verify that they are not carrying malware). TLS, meanwhile, suffers none of these compatibility issues and enjoys friendly treatment almost everywhere. The natural question, then, is:

Why can’t we have the best of both?

‘You mean run TLS, a protocol that runs on TCP, over UDP?’ Not entirely, but almost. Let’s first break a secure connection down into two parts.

Connection establishment: in this stage, a client and server find one another (e.g., via DNS resolution or directly by IP address), authenticate themselves, and exchange session keys that are later used to bulk-encrypt application data. This stage is all setup for secure communication, and it is where public key cryptography is used.

Session: this concerns the actual communication of application data between client and server, with the assurance that only the authenticated parties can read it. This data is encrypted with symmetric cryptography under the shared keys exchanged during setup.
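This division of labour can be sketched with a toy finite-field Diffie-Hellman exchange followed by symmetric message authentication, using only Python’s standard library. It is deliberately insecure and purely illustrative: the prime 2^127 - 1 and generator 3 are toy parameters, the hash-based key derivation is not the HKDF key schedule TLS 1.3 uses, server authentication (certificates and signatures) is omitted, and the session step only authenticates messages, whereas real TLS encrypts them with an AEAD cipher. The helper names `derive_key`, `protect`, and `verify` are hypothetical.

```python
import hashlib
import hmac
import secrets

# --- Connection establishment: public key cryptography (toy parameters!) ---
P = 2**127 - 1  # a Mersenne prime; far too weak for real use
G = 3           # toy generator

client_secret = secrets.randbelow(P - 2) + 1
server_secret = secrets.randbelow(P - 2) + 1
client_share = pow(G, client_secret, P)  # would travel in the Client Hello
server_share = pow(G, server_secret, P)  # would travel in the Server Hello

# Each side combines its own secret with the peer's public share.
client_shared = pow(server_share, client_secret, P)
server_shared = pow(client_share, server_secret, P)


def derive_key(shared: int) -> bytes:
    """Hypothetical helper: hash the shared secret into a 32-byte key."""
    return hashlib.sha256(shared.to_bytes(16, "big")).digest()


session_key = derive_key(client_shared)


# --- Session: symmetric cryptography under the shared key ---
def protect(key: bytes, message: bytes) -> tuple[bytes, bytes]:
    """Hypothetical helper: attach an HMAC-SHA256 tag (integrity only)."""
    return message, hmac.new(key, message, hashlib.sha256).digest()


def verify(key: bytes, message: bytes, tag: bytes) -> bool:
    """Hypothetical helper: constant-time check of the HMAC tag."""
    expected = hmac.new(key, message, hashlib.sha256).digest()
    return hmac.compare_digest(expected, tag)


msg, tag = protect(session_key, b"GET / HTTP/1.1")
# The server derives the same key independently and accepts the message.
print(verify(derive_key(server_shared), msg, tag))
```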

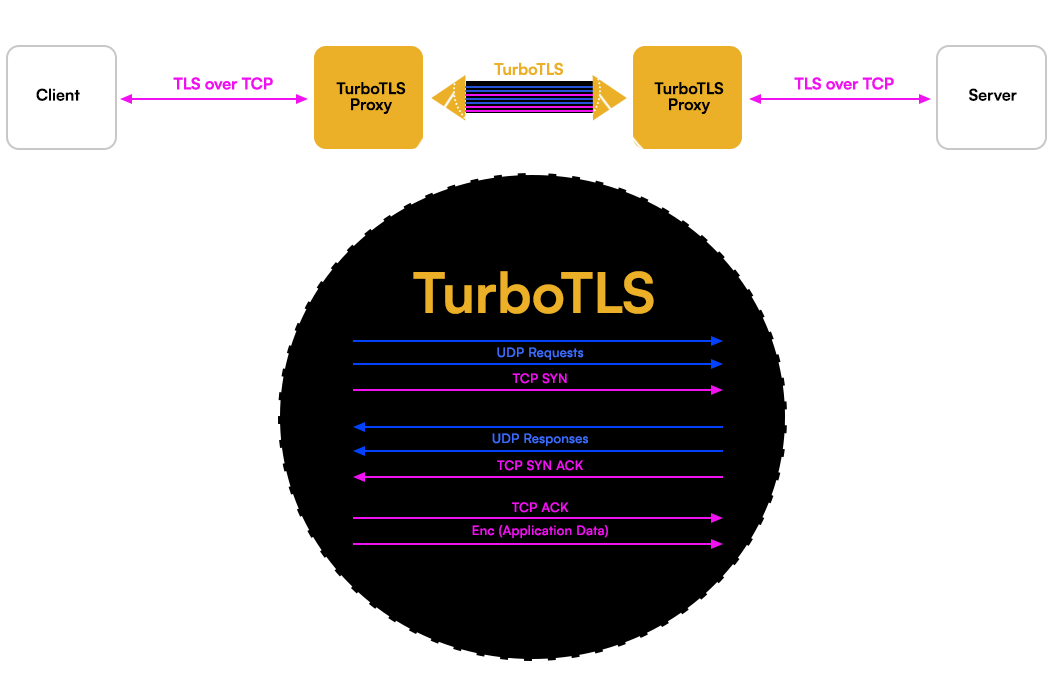

We observed that, by modifying TLS, it is possible to perform connection establishment over UDP (removing a round trip) while the TCP handshake takes place in parallel. We then switch to TCP to continue the session as normal. In essence, we turbo-charge connection establishment, then revert to standard procedure for the session. We call this approach TurboTLS.
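The round-trip saving can be sketched as a simple timeline that counts only network delay. This is a simplified model under assumptions made for illustration: TLS 1.3, a client that sends its Client Hello over UDP at the same moment as its TCP SYN, a server that answers the UDP request immediately, and no packet loss. Both function names are hypothetical.

```python
def tls_client_ready(rtt: float) -> float:
    """Hypothetical helper: time (standard TLS 1.3 over TCP) at which the
    client holds the server's first flight and an open TCP connection."""
    tcp_established = rtt                # SYN out, SYN-ACK back
    client_hello_sent = tcp_established  # Client Hello must wait for TCP
    return client_hello_sent + rtt       # server's first flight returns


def turbotls_client_ready(rtt: float) -> float:
    """Hypothetical helper: the same moment for TurboTLS, where the UDP
    Client Hello leaves at t = 0 together with the TCP SYN."""
    udp_response = rtt     # UDP request out, server response back
    tcp_established = rtt  # TCP handshake runs in parallel
    # The rest of the handshake continues over TCP once both are done.
    return max(udp_response, tcp_established)


for rtt in (10.0, 150.0):  # illustrative RTTs in milliseconds
    print(f"RTT {rtt:.0f} ms: TLS {tls_client_ready(rtt):.0f} ms, "
          f"TurboTLS {turbotls_client_ready(rtt):.0f} ms")
```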

FAQ

Is this secure? Can something go wrong with merging two transport protocols underneath a security protocol? To see why TurboTLS is secure, note that a client and server could interact over ordinary TLS without ever knowing that TurboTLS was involved: a middlebox could intercept the client’s connection-establishment request, convert it to UDP, and forward it, and a reverse proxy could convert it back before forwarding it to the server. In principle, adversaries could already be doing this to our TLS traffic today without compromising the security goals of TLS, so TurboTLS’s alternative network flows don’t weaken security.

Aren’t there already other optimizations like this out there? There are many techniques for optimizing secure channel protocols, such as TCP Fast Open, MinimaLT, TLS False Start, OPTLS, DTLS, and others. Some suit certain applications but not others, some struggle for adoption because they require significant infrastructure changes, and some change the TLS state machine and thus alter the security model. We discuss how TurboTLS fits into this landscape in more detail in our full paper.

Won’t traffic get filtered or blocked somewhere, forcing a restart with a different protocol? That may happen in some cases, but it won’t hurt performance: the client sends the TCP SYN at the same time as the UDP Client Hello. If the UDP Client Hello gets lost, TLS simply proceeds over TCP as it normally would, so the worst-case latency is essentially what you would have paid anyway. The client waits only a small amount of extra time for the server’s UDP response (in case the server did receive the UDP request) before reverting to standard TLS over TCP at a cutoff time.
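The fallback logic can be sketched with Python’s `asyncio`, modelling the UDP exchange and the TCP handshake as coroutines. The helper names, the simulated delays, and the cutoff values are hypothetical choices for illustration, not TurboTLS parameters. The client starts both at once; once TCP is up, it waits at most `cutoff` seconds for the UDP response before falling back to TLS over TCP.

```python
import asyncio
from typing import Optional


async def udp_exchange(delay: Optional[float]) -> str:
    """Hypothetical stand-in for the UDP Client Hello / Server Hello exchange.
    delay=None models a lost or blocked datagram: no response ever arrives."""
    if delay is None:
        await asyncio.Event().wait()  # never set: waits until cancelled
    else:
        await asyncio.sleep(delay)
    return "server flight received over UDP"


async def tcp_handshake(rtt: float) -> None:
    """Hypothetical stand-in for SYN / SYN-ACK taking one round trip."""
    await asyncio.sleep(rtt)


async def connect(rtt: float, udp_delay: Optional[float], cutoff: float) -> str:
    """Hypothetical client: start UDP and TCP together, bounded fallback."""
    udp = asyncio.create_task(udp_exchange(udp_delay))
    await tcp_handshake(rtt)  # the UDP task progresses concurrently
    try:
        # Once TCP is up, give the UDP response at most `cutoff` more seconds;
        # wait_for returns at once if the UDP task has already finished.
        await asyncio.wait_for(udp, timeout=cutoff)
        return "turbo"
    except asyncio.TimeoutError:
        # wait_for has cancelled the UDP task; continue with TLS over TCP.
        return "fallback: Client Hello over TCP"


print(asyncio.run(connect(rtt=0.05, udp_delay=0.01, cutoff=0.05)))
print(asyncio.run(connect(rtt=0.05, udp_delay=None, cutoff=0.05)))
```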

If the Server Hello is huge, as is the case with post-quantum cryptography, won’t the UDP packets be filtered by firewalls trying to prevent DDoS amplification attacks? We implemented client request-based fragmentation to overcome this. To maintain a one-to-one correspondence between request packets and response packets, the client simply sends some extra empty UDP packets in its initial flow, which lets firewalls see one request for every response and infer that the client and server are in an established exchange.
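The padding rule can be sketched as follows, under assumptions made for illustration rather than taken from the paper: the client knows (or estimates) the size of the server’s response, every datagram carries at most `max_payload` bytes, and the server sends exactly one response datagram per request datagram. The function name and the 1200-byte default are hypothetical choices.

```python
import math


def fragment_client_hello(client_hello: bytes, expected_response_len: int,
                          max_payload: int = 1200) -> list[bytes]:
    """Hypothetical helper: split a Client Hello into UDP payloads, padding
    with empty datagrams so the request has at least as many datagrams as
    the expected response (one request datagram per response datagram)."""
    hello_parts = [client_hello[i:i + max_payload]
                   for i in range(0, len(client_hello), max_payload)]
    needed = math.ceil(expected_response_len / max_payload)
    # Extra empty datagrams let a firewall see one request per response.
    padding = [b""] * max(0, needed - len(hello_parts))
    return hello_parts + padding


# Illustrative sizes: a 500-byte Client Hello, a 12,000-byte response.
fragments = fragment_client_hello(b"\x16" * 500, expected_response_len=12_000)
print(len(fragments))
```

A real implementation would also need a small header in each datagram (for example, a connection identifier and fragment index) so the server can reassemble the request and match responses to requests; that is omitted here.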

How does a client know that a server supports TurboTLS? The server can include a flag in its DNS ‘HTTPS’ record! Clients should only send TurboTLS requests to servers they know support TurboTLS.
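As an illustration, a client could check the SvcParams of an HTTPS record (specified in RFC 9460) in its text presentation format for an advertised flag. The `turbotls` key below is purely hypothetical; no such SvcParam is registered, and a real deployment would need to register one. The parser handles only simple space-separated parameters, not quoted values.

```python
def supports_turbotls(https_rdata: str, flag: str = "turbotls") -> bool:
    """Hypothetical helper: look for a flag in an HTTPS record's SvcParams.

    Expects presentation format "SvcPriority TargetName key[=value] ...",
    e.g. "1 . alpn=h2,h3". The "turbotls" key is a made-up example.
    """
    fields = https_rdata.split()
    if len(fields) < 2:
        raise ValueError("expected at least SvcPriority and TargetName")
    if int(fields[0]) == 0:
        return False  # AliasMode (priority 0): SvcParams are ignored
    keys = {param.split("=", 1)[0].lower() for param in fields[2:]}
    return flag in keys


print(supports_turbotls("1 . alpn=h2,h3 turbotls"))
print(supports_turbotls("1 . alpn=h2,h3"))
```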

Okay, fine, but is this improvement actually valuable?

Transparent Proxying

There is momentum toward QUIC, for good reasons: it is a well-engineered protocol with fast connection establishment. However, upgrading systems is a complex process that is likely to take a significant amount of time, especially for a worldwide migration. There are also reasons not to switch to QUIC at all, for example, the need for firewalls to maintain visibility into traffic and to inspect packets easily.

TurboTLS can be implemented as a transparent proxy via middleboxes, or even as a software daemon, without requiring clients or servers to be upgraded. This lets current TLS users benefit from fast connection establishment, one of QUIC’s primary benefits, without complex upgrade processes and without giving ground on the security concerns (such as packet inspection) that lead some to block QUIC.

Transparent TurboTLS Proxy.
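The key property of such a proxy is that it never needs to parse or decrypt TLS; it only changes how the bytes travel. The sketch below shows just a forwarding relay built on Python’s `asyncio` streams, copying opaque bytes between a client and an upstream server over TCP. It is an illustration under stated assumptions, not the TurboTLS proxy: the UDP Client Hello/Server Hello path and the OS-level interception that would make it truly transparent are both omitted, and the helper names are hypothetical.

```python
import asyncio


async def pipe(reader: asyncio.StreamReader, writer: asyncio.StreamWriter) -> None:
    """Copy bytes verbatim in one direction until end-of-stream."""
    try:
        while data := await reader.read(65536):
            writer.write(data)
            await writer.drain()
    finally:
        writer.close()


def make_relay(upstream_host: str, upstream_port: int):
    """Hypothetical helper: connection handler that forwards to the server."""
    async def handle(client_r: asyncio.StreamReader,
                     client_w: asyncio.StreamWriter) -> None:
        up_r, up_w = await asyncio.open_connection(upstream_host, upstream_port)
        # TLS records pass through untouched; the relay never parses them.
        await asyncio.gather(pipe(client_r, up_w), pipe(up_r, client_w))
    return handle


async def demo() -> bytes:
    """Hypothetical demo: relay opaque bytes to a local echo server."""
    async def echo(r: asyncio.StreamReader, w: asyncio.StreamWriter) -> None:
        w.write(await r.read(65536))
        await w.drain()
        w.close()

    upstream = await asyncio.start_server(echo, "127.0.0.1", 0)
    up_port = upstream.sockets[0].getsockname()[1]
    proxy = await asyncio.start_server(make_relay("127.0.0.1", up_port),
                                       "127.0.0.1", 0)
    proxy_port = proxy.sockets[0].getsockname()[1]

    reader, writer = await asyncio.open_connection("127.0.0.1", proxy_port)
    writer.write(b"\x16\x03\x01 stand-in for TLS bytes")
    await writer.drain()
    reply = await reader.read()  # read until the relay closes the stream
    writer.close()
    await writer.wait_closed()
    upstream.close()
    proxy.close()
    return reply


print(asyncio.run(demo()))
```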

Results

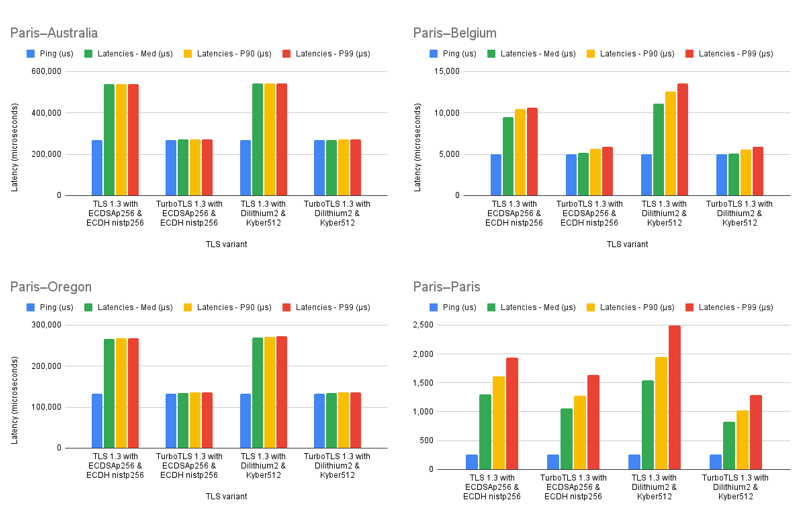

As our experiments show, the latency of establishing a connection depends on two factors: the cumulative time the client and server spend on the necessary cryptographic operations, and the time taken to send information over the network. For nearby connections, as one would expect, latency is dominated by computational costs because round-trip times are so low. For example, in the Paris-Paris case, with client and server in the same city, moving from TLS to TurboTLS yields only a small improvement. At the other extreme, latencies for intercontinental connections such as Paris-Australia or Paris-Oregon are dominated by the time packets spend traveling over the network. In these cases we observe close to a 50% decrease in latency, down to only slightly more than the ping time (one round trip with no computation at all).

However, most connections are not intercontinental. Paris-Belgium represents a national-distance connection, somewhat common on the internet today, and the significant improvement seen in that case underlines the value of TurboTLS.

TurboTLS speedtest.
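The two-factor explanation of these results can be captured in a back-of-the-envelope model: establishment latency is roughly computation time plus round trips times RTT, with two round trips for TLS 1.3 over TCP and one for TurboTLS. The helper names are hypothetical and the inputs are illustrative placeholders, not the measured values from our experiments. The point is only the shape of the curve: the relative saving is small when computation dominates and tends toward 50% as the RTT grows.

```python
def handshake_ms(compute_ms: float, rtt_ms: float, round_trips: int) -> float:
    """Hypothetical helper: crypto computation plus network round trips."""
    return compute_ms + round_trips * rtt_ms


def relative_saving(compute_ms: float, rtt_ms: float) -> float:
    """Hypothetical helper: fraction of TLS-over-TCP latency removed by
    saving one round trip (TurboTLS in this simplified model)."""
    tls = handshake_ms(compute_ms, rtt_ms, round_trips=2)
    turbo = handshake_ms(compute_ms, rtt_ms, round_trips=1)
    return (tls - turbo) / tls


COMPUTE_MS = 5.0  # illustrative cost of the cryptographic operations
for rtt_ms in (0.5, 10.0, 150.0, 300.0):  # illustrative RTTs, near to far
    print(f"RTT {rtt_ms:6.1f} ms -> saving "
          f"{relative_saving(COMPUTE_MS, rtt_ms):.1%}")
```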

As noted above, because TurboTLS changes only how TLS is transported, this performance boost can be obtained without modifying the code of existing applications. TurboTLS allows users to reap one of the main benefits of QUIC with almost no effort, everywhere, today.

Summary for Expert Readers

In a recent paper we proposed TurboTLS, a simple modification of the Transport Layer Security (TLS) protocol that uses client-based UDP fragmentation to exchange the Client Hello and Server Hello messages of a TLS connection. This UDP exchange is done in parallel with a TCP handshake, and the rest of the TLS protocol then runs over the TCP connection. With this modification, we obtain TLS over TCP connections in one round trip, with a natural fallback mechanism: if any of the UDP datagrams are lost or blocked, the client continues with the normal TLS over TCP protocol as if it had never tried to use TurboTLS, with no latency penalty.

The performance results (see Results above) show that TurboTLS halves the TLS handshake latency over long distances (and, by extension, we expect over any high-latency connection), which is the environment in which users care most about latency. This can be especially important when multiple TLS connections are initiated sequentially (e.g., the NY Times homepage requires quite a few successive TLS connections).

Of course, a person skilled in network security will have a natural question: why migrate to TurboTLS if QUIC already provides a one-round-trip solution over UDP, along with many other advantages such as connection multiplexing? In some cases, using QUIC is simply not possible (we refer the reader to the paper for a detailed argument). But even if QUIC one day ubiquitously replaces TLS, there is one reason to use TurboTLS alongside QUIC during the transition period.

As illustrated below, unlike QUIC, TurboTLS modifies only how TLS packets are transported and nothing else. This means that an application can be migrated from TLS to TurboTLS without modifying the application itself, using a transparent (local or non-local) proxy that changes how TLS messages are transported. Users can therefore benefit from one-round-trip TLS without changing application code. TurboTLS allows users to reap one of the main benefits of QUIC with almost no effort, everywhere, today.