Federated Learning for Enhanced Network Security

" A painted depiction of space teams from different continents exploring the Moon for water resources in federated learning settings without disclosing privacy, inspired by Belgian artist Magritte!" by DALL-E 3.

Introduction

Artificial Intelligence (AI) has revolutionized how we approach and exploit data. While this data can be valuable for mining and discovering patterns, it also introduces privacy and security risks [1]. One approach to addressing this issue is federated learning (FL) [2]. In the context of network security, FL facilitates collaboration among different parties to jointly train security models. This allows organizations to contribute their expertise without disclosing sensitive details of the underlying data.

The collaborative nature of FL can address critical challenges, supporting real-time vulnerability analysis, anomaly detection, and the development of robust security incident response systems. By gathering insights from various entities without exposing the underlying raw data, FL can strengthen the overall security of network traffic, maintaining a balance between implementing advanced AI techniques and the pressing need for more robust network security [3].

Federated Learning as a Solution

When a single party lacks sufficient training data, pooling data from multiple parties to train a centralized AI model is not always feasible or desirable, because participants may be unwilling to share information with untrusted entities. FL emerges as a solution in the field of AI, enabling collaboration among distributed nodes while preserving individual privacy. This approach involves sharing model parameters among participants, either through a central server or cyclically between participants, so that local AI model parameters are aggregated into a global model.

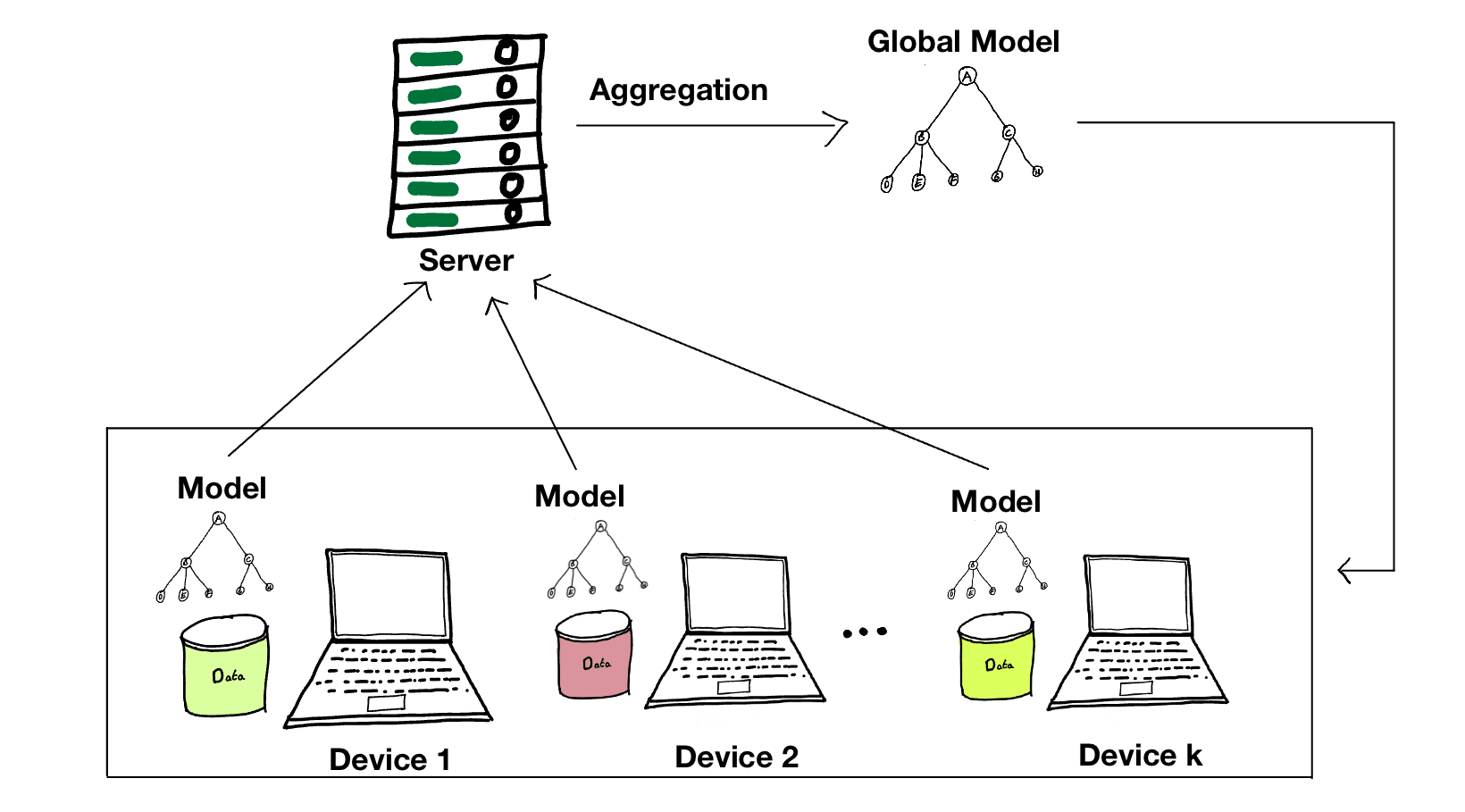

Figure 1: Typical centralized federated learning workflow, using a tree-based ML model as an example.

The model trained with FL can be a regression model, a decision tree, a neural network, or any architecture with trainable parameters. At the start of each training round, a central server sends a copy of the current global model (initialized before the first round) to the participating clients. Each client then trains this copy locally on its own data and sends only its model updates (i.e., parameter updates) back to the central server. Once all model updates are collected, the central server uses them to update the global model, concluding one round of FL training. Figure 1 depicts an example of one such round.
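The round described above can be sketched in a few lines of Python. This is a minimal illustration, assuming a one-dimensional linear model and weighted parameter averaging on the server (in the spirit of the federated averaging scheme of [2]); the function names `local_train`, `aggregate`, and `fl_round`, the learning rate, and the toy datasets are all hypothetical choices made for this example.

```python
from typing import List, Tuple

Params = List[float]                  # [weight, bias] of a 1-D linear model
Dataset = List[Tuple[float, float]]   # (x, y) pairs held privately by one client


def local_train(global_params: Params, data: Dataset,
                lr: float = 0.05, epochs: int = 5) -> Params:
    """Client side: start from the global model and run a few gradient steps locally."""
    w, b = global_params
    n = len(data)
    for _ in range(epochs):
        # Gradients of the mean squared error on the client's own data.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return [w, b]


def aggregate(updates: List[Params], sizes: List[int]) -> Params:
    """Server side: average the client models, weighted by local dataset size."""
    total = sum(sizes)
    dim = len(updates[0])
    return [sum(u[i] * s for u, s in zip(updates, sizes)) / total
            for i in range(dim)]


def fl_round(global_params: Params, clients: List[Dataset]) -> Params:
    """One FL round: broadcast the model, train locally, aggregate on the server."""
    updates = [local_train(global_params, data) for data in clients]
    return aggregate(updates, [len(data) for data in clients])


# Two hypothetical clients whose private data both follow y = 2x + 1.
clients = [
    [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)],
    [(-1.0, -1.0), (0.5, 2.0)],
]
params = [0.0, 0.0]
for _ in range(200):
    params = fl_round(params, clients)
print(params)  # the raw (x, y) pairs never left the clients
```

Only the two-element parameter lists cross the client/server boundary here; a real deployment would add client selection, transport, and failure handling, none of which is shown.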

FL offers several advantages over traditional, centrally trained AI models. It not only avoids excessive communication of clients’ sensitive information, but it also removes the burden of transferring massive datasets. Since the learning paradigm is distributed, FL also opens doors for continuous collaborative learning and training, provided processes for collecting and serving new data are secure.

FL also has great potential to transform many fields, especially those with particularly sensitive risks or concerns around the leakage of confidential data. The benefits span from healthcare, telecommunication, and transportation to finance, retail, and e-commerce. For instance, hospitals can collaborate on training a global model from patient data without moving or centralizing sensitive data. In the financial industry, clients can benefit from enhanced fraud detection and risk assessment through aggregated insights from multiple institutions without putting customer data at risk [4, 5].

Federated Learning Settings

In FL, there are two primary settings, distinguished by the nature of the participating clients: cross-device and cross-silo. The cross-device setting involves clients distributed across small devices, such as mobile phones. Cross-silo settings, on the other hand, involve participants from large organizations or companies, such as hospitals and banks, typically with a limited number of participants but larger computational resources [6].

FL emerges as a promising candidate for cooperative innovation across a variety of domains and organizations, without compromising data integrity. Imagine a wide pool of entities - financial institutions, healthcare providers, or network operators - retaining control over their data while extracting knowledge from the data silos. For instance, network traffic analysis is a crucial component of network infrastructures. However, a strong network security AI model needs a diverse set of data to perform robustly [7]. FL acts as a conduit between institutions such as banks and insurance companies, allowing them to jointly train detection models on network traffic without exposing their customers’ traffic to other participants. This new paradigm enables diverse entities to unite and train models for mutual benefit. It enriches collaborative knowledge while upholding the privacy of each siloed entity.

Note, however, that FL brings with it the natural complexities of distributed systems whose entities may have divergent goals and priorities. As the data handled by these entities is potentially private (e.g., customer, employee, or business operations data), one must also consider potential security issues between entities. Thus, designing FL protocols can be challenging, and the known solutions induce non-negligible costs [7].

Security and Privacy for FL

Involving multiple clients in collaborative training and exposing the results of their computation (the model parameters) can open the door to attacks. Practitioners and researchers have been concerned about the security and privacy of this distributed learning paradigm since its early days [8].

The leading algorithms for ensuring data privacy in FL fall into two categories: encryption and perturbation.

There are two mainstream encryption-based approaches for secure and private FL. Homomorphic Encryption (HE) enables a single entity to compute some functions over encrypted data without being able to decrypt it. Secure Multi-party Computation (MPC) relies on trust separation between multiple entities that jointly compute a function over their inputs while keeping those inputs private [9]. By designing secure aggregation protocols and leveraging encryption, model updates from different nodes can be combined into a global update without exposing the individual update that each party computed on its own [10].
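To make the idea of secure aggregation more concrete, the sketch below uses pairwise additive masking, one simple way (among several) to let a server learn only the sum of the clients' updates. It is an illustrative assumption rather than the protocol of [9] or [10]: updates are assumed to be already encoded as integers modulo `MODULUS`, the shared seeds are handed out by a hypothetical `pairwise_seeds` helper standing in for a real key agreement, and Python's `random.Random` is used as a placeholder for a cryptographically secure generator.

```python
import random
from typing import Dict, List, Tuple

MODULUS = 2**32  # assumed fixed-point encoding: all arithmetic is modulo this value


def pairwise_seeds(n_clients: int, rng: random.Random) -> Dict[Tuple[int, int], int]:
    """Stand-in for a key agreement: one shared secret seed per pair of clients."""
    return {(i, j): rng.getrandbits(64)
            for i in range(n_clients) for j in range(i + 1, n_clients)}


def mask_update(client: int, update: List[int], n_clients: int,
                seeds: Dict[Tuple[int, int], int]) -> List[int]:
    """Add the shared mask for each peer with a larger id, subtract it otherwise."""
    masked = list(update)
    for peer in range(n_clients):
        if peer == client:
            continue
        pair = (min(client, peer), max(client, peer))
        # Both members of the pair derive the same mask stream from the shared seed.
        # NOTE: random.Random is NOT cryptographically secure; illustration only.
        prg = random.Random(seeds[pair])
        sign = 1 if client < peer else -1
        for k in range(len(masked)):
            masked[k] = (masked[k] + sign * prg.randrange(MODULUS)) % MODULUS
    return masked


def server_sum(masked_updates: List[List[int]]) -> List[int]:
    """The server adds the masked vectors; every pairwise mask cancels out."""
    return [sum(column) % MODULUS for column in zip(*masked_updates)]


# Three hypothetical clients with integer-encoded updates.
updates = [[3, 10], [5, 20], [7, 30]]
seeds = pairwise_seeds(len(updates), random.Random(0))
masked = [mask_update(i, u, len(updates), seeds) for i, u in enumerate(updates)]
total = server_sum(masked)
print(total)  # equals the element-wise sum of the unmasked updates
```

Each masked vector looks random on its own, yet the masks cancel in the sum. The sketch ignores client dropouts and collusion, which practical protocols must handle.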

On the other hand, perturbation-based approaches such as differential privacy (DP) introduce controlled noise to model parameters before updates are shared. This allows participants to contribute without revealing the exact update they would otherwise have sent, which prevents some inference attacks on the participants’ input data. There are two ways to guarantee DP: perturb the local model with noise on the client side, or introduce noise on the central server after aggregating model weights [11].
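The client-side variant can be sketched as follows, assuming the common recipe of clipping an update to a bounded L2 norm and then adding Gaussian noise scaled to that bound. The names `clip_l2` and `privatize`, and the parameters `clip_norm` and `noise_multiplier`, are hypothetical; the formal privacy guarantee depends on how the noise is calibrated and accounted for across rounds, which this sketch does not cover.

```python
import math
import random
from typing import List


def clip_l2(update: List[float], clip_norm: float) -> List[float]:
    """Scale the update down so that its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(v * v for v in update))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [v * scale for v in update]


def privatize(update: List[float], clip_norm: float,
              noise_multiplier: float, rng: random.Random) -> List[float]:
    """Clip the update, then add Gaussian noise with std = noise_multiplier * clip_norm."""
    clipped = clip_l2(update, clip_norm)
    sigma = noise_multiplier * clip_norm
    return [v + rng.gauss(0.0, sigma) for v in clipped]


# A hypothetical local update; only the noisy version would be sent to the server.
rng = random.Random(42)
local_update = [3.0, 4.0]
noisy_update = privatize(local_update, clip_norm=1.0, noise_multiplier=0.5, rng=rng)
print(noisy_update)
```

For the server-side variant, the same noise step would instead be applied once to the aggregated weights, which requires the clients to trust the server with their unperturbed updates.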

FL Applications in the Cybersecurity Domain

FL holds significant potential for various network domains to increase the efficiency of network operations while protecting data privacy [12]. In telecommunications, network maintenance costs amount to billions of dollars for operating companies. FL can enhance predictive maintenance by allowing distributed edge devices like routers and switches to collaboratively learn patterns and predict potential network failures [13]. These devices can indeed compute model updates locally based on their local observations, sending only aggregated insights to a central server.

IoT devices and vehicular mobility networks can also benefit greatly from FL with regard to efficient energy management or real-time monitoring systems [14]. In smart cities, for example, edge devices such as sensors and cameras can collaborate to improve model forecasts for traffic optimization or environmental monitoring.

FL also shows promise in enhancing network security within the same organization or a cross-organizational environment, enabling collaborative learning to identify potential threats [15]. This facilitates the detection of emerging threats, anomalies, or attack patterns. FL allows the network to evolve and respond dynamically to adversaries, thereby enhancing the overall resilience of the network infrastructure [16]. Integrating FL into network security strategies ultimately empowers network operators to proactively defend their systems against cyber threats, strengthening the overall security posture of their networks while minimizing concerns related to data exposure and privacy breaches.

Whether applied in telecommunications, cybersecurity, IoT, or vehicular networks, FL can be transformative at scale, fostering collaboration and intelligence across distributed entities within the network.

Challenges and Future Directions

Although FL offers major advantages for distributed and privacy-preserving AI, it comes with several limitations and open research directions. Two notable challenges are data heterogeneity and communication overhead [17].

In real-world scenarios, local data are typically not independent and identically distributed (IID), a situation commonly referred to as non-IID. This data heterogeneity poses issues for FL, affecting both the convergence speed of the global model and its performance on local data. Beyond poor generalization, data heterogeneity can adversely impact model personalization, which aims to tailor models to local data while retaining the generalization ability of the global model. Personalization is a promising research direction for addressing non-IID data and improving the generalizability of FL. One potential solution for personalized FL is for each client to use information from the central server while employing a tailored objective during local training to develop its own individualized model [18].
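One way to read "a tailored objective" is a local loss with an added proximal term that pulls the personalized model toward the global one. The sketch below assumes this regularization-based formulation, which is only one of the families of personalized FL and is not necessarily the specific method of [18]. The function `personalized_train`, the strength parameter `lam`, and the toy data are hypothetical.

```python
from typing import List, Tuple

Params = List[float]                  # [weight, bias] of a 1-D linear model
Dataset = List[Tuple[float, float]]   # (x, y) pairs held by one client


def personalized_train(global_params: Params, data: Dataset, lam: float,
                       lr: float = 0.05, steps: int = 200) -> Params:
    """Minimize local MSE + (lam / 2) * ||personal - global||^2 by gradient descent."""
    gw, gb = global_params
    w, b = gw, gb                     # start from the model received from the server
    n = len(data)
    for _ in range(steps):
        # Local data term plus a pull toward the global parameters.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n + lam * (w - gw)
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n + lam * (b - gb)
        w -= lr * grad_w
        b -= lr * grad_b
    return [w, b]


# Hypothetical client whose data follow y = 3x, while the global model says y = x.
global_model = [1.0, 0.0]
local_data = [(-1.0, -3.0), (0.0, 0.0), (1.0, 3.0)]

purely_local = personalized_train(global_model, local_data, lam=0.0)
personalized = personalized_train(global_model, local_data, lam=1.0)
print(purely_local, personalized)
```

With `lam = 0` the client simply fits its own data; larger values keep the personalized model closer to the global one, trading local fit for shared knowledge.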

FL works by transmitting model parameters between clients’ local servers and the central server through successive rounds of training. In the case of frequent model updates, FL can also suffer from communication overhead that influences the efficiency and performance of collaborative model training. When local data distributions are non-IID, additional communication is required between clients and the server to synchronize model updates and ensure convergence. This leads to increased latency and bandwidth usage. Mitigating communication overhead in FL involves optimizing communication strategies, exploring compression techniques for model updates, and designing algorithms that minimize the amount and frequency of information exchanged while ensuring convergence [19]. Striking a balance between effective collaboration and reducing communication burden is essential for the successful implementation of FL, particularly in scenarios where network resources are limited.
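As an illustration of update compression, the sketch below applies top-k sparsification: the client sends only the k entries of its update with the largest magnitude, along with their indices, and the server treats the rest as zero. This is one simple technique chosen for the example and not necessarily the approach of [19]; `top_k_sparsify` and `densify` are hypothetical helper names.

```python
from typing import Dict, List


def top_k_sparsify(update: List[float], k: int) -> Dict[int, float]:
    """Client side: keep only the k largest-magnitude entries, keyed by index."""
    ranked = sorted(range(len(update)), key=lambda i: abs(update[i]), reverse=True)
    return {i: update[i] for i in sorted(ranked[:k])}


def densify(sparse: Dict[int, float], dim: int) -> List[float]:
    """Server side: rebuild a full-length vector, filling dropped entries with zeros."""
    dense = [0.0] * dim
    for index, value in sparse.items():
        dense[index] = value
    return dense


# A hypothetical four-parameter update compressed to two transmitted entries.
update = [0.1, -2.0, 0.05, 1.5]
message = top_k_sparsify(update, k=2)
restored = densify(message, dim=len(update))
print(message, restored)
```

The saving grows with model size, at the cost of a lossy update; practical schemes often accumulate the dropped residual locally and add it to the next round's update, which is omitted here.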

Conclusion

In summary, FL can play a vital role in the collective and privacy-preserving learning paradigm, especially in network applications. By decentralizing the model training without transferring training datasets, FL not only enhances predictive maintenance, cybersecurity, and resource optimization but also preserves individual data privacy. In recent years, FL has made great strides and received growing attention from both academia and industry. This is natural, as FL offers a range of advantages, from improved network reliability to compliance with privacy regulations, shaping the future of intelligent, secure, and efficient network applications. As institutions and operating companies increasingly adopt FL, we anticipate a landscape where collaborative learning becomes synonymous with network resilience, fostering intelligent yet privacy-aware digital ecosystems.

References

[1] Papernot, N., McDaniel, P., Sinha, A., & Wellman, M. P. (2018, April). SoK: Security and privacy in machine learning. In 2018 IEEE European Symposium on Security and Privacy (EuroS&P) (pp. 399-414). IEEE.

[2] McMahan, B., Moore, E., Ramage, D., Hampson, S., & y Arcas, B. A. (2017, April). Communication-efficient learning of deep networks from decentralized data. In Artificial intelligence and statistics (pp. 1273-1282). PMLR.

[3] Ferrag, M. A., Friha, O., Maglaras, L., Janicke, H., & Shu, L. (2021). Federated deep learning for cyber security in the internet of things: Concepts, applications, and experimental analysis. IEEE Access, 9, 138509-138542.

[4] Zheng, W., Yan, L., Gou, C., & Wang, F. Y. (2021, January). Federated meta-learning for fraudulent credit card detection. In Proceedings of the Twenty-Ninth International Conference on International Joint Conferences on Artificial Intelligence (pp. 4654-4660).

[5] Yang, W., Zhang, Y., Ye, K., Li, L., & Xu, C. Z. (2019). FFD: A federated learning based method for credit card fraud detection. In Big Data–BigData 2019: 8th International Congress, Held as Part of the Services Conference Federation, SCF 2019, San Diego, CA, USA, June 25–30, 2019, Proceedings 8 (pp. 18-32). Springer International Publishing.

[6] Huang, C., Huang, J., & Liu, X. (2022). Cross-silo federated learning: Challenges and opportunities. arXiv preprint arXiv:2206.12949.

[7] Ghimire, B., & Rawat, D. B. (2022). Recent advances on federated learning for cybersecurity and cybersecurity for federated learning for internet of things. IEEE Internet of Things Journal, 9(11), 8229-8249.

[8] Mothukuri, V., Parizi, R. M., Pouriyeh, S., Huang, Y., Dehghantanha, A., & Srivastava, G. (2021). A survey on security and privacy of federated learning. Future Generation Computer Systems, 115, 619-640.

[9] Zhang, C., Li, S., Xia, J., Wang, W., Yan, F., & Liu, Y. (2020). BatchCrypt: Efficient homomorphic encryption for cross-silo federated learning. In 2020 USENIX Annual Technical Conference (USENIX ATC 20) (pp. 493-506).

[10] Yin, X., Zhu, Y., & Hu, J. (2021). A comprehensive survey of privacy-preserving federated learning: A taxonomy, review, and future directions. ACM Computing Surveys (CSUR), 54(6), 1-36.

[11] Wei, K., Li, J., Ding, M., Ma, C., Yang, H. H., Farokhi, F., … & Poor, H. V. (2020). Federated learning with differential privacy: Algorithms and performance analysis. IEEE Transactions on Information Forensics and Security, 15, 3454-3469.

[12] Mun, H., & Lee, Y. (2020). Internet traffic classification with federated learning. Electronics, 10(1), 27.

[13] Abdelli, K., & Cho, J. Y. (2021). Predictive maintenance for optical networks in robust collaborative learning.

[14] Manias, D. M., & Shami, A. (2021). Making a case for federated learning in the internet of vehicles and intelligent transportation systems. IEEE Network, 35(3), 88-94.

[15] Huang, C., Tang, M., Ma, Q., Huang, J., & Liu, X. (2023). Promoting Collaborations in Cross-Silo Federated Learning: Challenges and Opportunities. IEEE Communications Magazine.

[16] Li, J., Tong, X., Liu, J., & Cheng, L. (2023). An efficient federated learning system for network intrusion detection. IEEE Systems Journal.

[17] Kairouz, P., McMahan, H. B., Avent, B., Bellet, A., Bennis, M., Bhagoji, A. N., … & Zhao, S. (2021). Advances and open problems in federated learning. Foundations and Trends® in Machine Learning, 14(1–2), 1-210.

[18] Tan, A. Z., Yu, H., Cui, L., & Yang, Q. (2022). Towards personalized federated learning. IEEE Transactions on Neural Networks and Learning Systems.

[19] Chen, M., Shlezinger, N., Poor, H. V., Eldar, Y. C., & Cui, S. (2021). Communication-efficient federated learning. Proceedings of the National Academy of Sciences, 118(17), e2024789118.